The export_training_data() method generates training samples for training deep learning models, given the input imagery along with labeled vector data or classified images. Deep learning training samples are small subimages, called image chips, that contain the feature or class of interest. This tool creates folders containing image chips for training the model, labels, and metadata files, and stores them in the raster store of your enterprise GIS. The image chips are often small, such as 256 pixel rows by 256 pixel columns, unless the training sample size is larger. These training samples support model training workflows using the arcgis.learn package as well as third-party deep learning libraries such as TensorFlow or PyTorch.
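To make the idea of image chips concrete, here is a minimal sketch of how an image could be cut into 256 by 256 pixel chips. It is not the export_training_data() implementation: the chip_offsets function, its stride parameter, and the 1024 by 768 image size are assumptions made purely for illustration.

```python
def chip_offsets(width, height, chip_size=256, stride=256):
    """Return (column, row) pixel offsets of every full chip that fits in the image.

    Hypothetical helper: chip_size and stride are illustrative assumptions,
    not parameters taken from the ArcGIS tool.
    """
    offsets = []
    for row in range(0, height - chip_size + 1, stride):
        for col in range(0, width - chip_size + 1, stride):
            offsets.append((col, row))
    return offsets


# A 1024 x 768 pixel image yields a 4 x 3 grid of non-overlapping chips.
offsets = chip_offsets(1024, 768)
print(len(offsets))
```

A stride smaller than the chip size would produce overlapping chips, which is one common way to get more samples out of the same imagery.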

The Duplicate Geometry check searches a designated feature class for features that share the same geometry and are located in the same place. You can either search for all duplicate features or only those with identical attribution on editable fields. If you are validating attribution as well as geometry, you can optionally choose to ignore fields that are considered feature-level metadata.

The check can be run on feature classes, subtypes, and features selected using a SQL query. With Workforce for ArcGIS, we can create assignments for mobile workers, such as inspectors, and drive field activity. The models in arcgis.learn are based on pretrained convolutional neural networks (CNNs) that have been trained on millions of common images, such as those in the ImageNet dataset, for image classification tasks. These CNNs (such as ResNet, VGG, and Inception) can classify what is in an image by basing their decision on features that they learn to identify in these images.

These methods can create geometries that may be invalid for ArcGIS. ArcGIS Pro includes tools for labeling features and exporting training data for deep learning workflows, and has been enhanced for deploying trained models for feature extraction or classification. ArcGIS Image Server in the ArcGIS Enterprise 10.7 release has similar capabilities and allows deploying deep learning models at scale by leveraging distributed computing. For most of you, the end goal of your script will not be to generate a list of unique attribute values; rather, you need this list as part of a script that accomplishes a larger task. For example, you might want to write a script that creates a series of wildfire maps that are exported to PDF files, with each map showing wildfires that were started in different ways.

The layer containing wildfire data might have a FIRE_TYPE field containing values that reflect how the fire originated. The initial processing of this script might need to generate a unique list of these values and then loop through the values, applying each one in a definition query before exporting the map. The DDL API also supports the creation of annotation feature classes.

Similar to creating tables and feature classes, creation of an annotation feature class begins with a list of FieldDescription objects and a ShapeDescription object. For its backbone, SSD uses a pretrained image classification network as a feature extractor. This is typically a network like ResNet trained on ImageNet, from which the final fully connected layers that produce the predicted class of an input image have been removed. We are thus left with a deep neural network that is able to extract semantic meaning from the input image while preserving the spatial structure of the image, albeit at a lower resolution.

For ResNet34, the backbone results in 256 7x7 'feature maps' of activations for each input image. Each of these 256 feature maps can be interpreted as a grid of 7x7 activations that fire when a particular feature is detected in the image. Using the Find Duplicate Features tool, you can easily find duplicated features and records in your data. Found duplicates can be sorted and are listed in a new attribute field of an output feature class or table. Using this index field, you can then select duplicated entries and/or remove them if required. The arcgis.learn module is based on PyTorch and fast.ai and enables fine-tuning of pretrained torchvision models on satellite imagery.

Pretrained models like these are excellent feature extractors and can be fine-tuned relatively easily on another task or different imagery without needing as much data. The arcgis.learn models leverage fast.ai's learning rate finder and one-cycle learning, which allow for much faster training and remove guesswork from the deep learning process. The arcgis.learn module in the ArcGIS API for Python enables GIS analysts and data scientists to easily adopt and apply deep learning in their workflows. It enables training state-of-the-art deep learning models with a simple, intuitive API.

By adopting the latest research in deep learning, it allows for much faster training and removes guesswork from the deep learning process. It integrates seamlessly with the ArcGIS platform by consuming the exported training samples directly, and the models that it creates can be used directly for inferencing in ArcGIS Pro and Image Server. This blog article, originally written as an ArcGIS Notebook, shows how we did this with the help of the arcgis.learn module. In this chapter, we gave a brief but comprehensive overview of computer programming and the Python programming language.

We reviewed the basics of computer programming, including variables, iteration, and conditionals. We reviewed the Windows Path environment variable and the Python system path. We explored the data types of Python, including integers, strings, and floats, and the data containers of Python, such as lists, tuples, and dictionaries.

We learned some basic code structure for scripts, and how to execute those scripts. The for row in cursor snippet initiates a for loop through each record in the feature class. The row[0] snippet simply indicates that the attribute field located at position zero is extracted for the current row.

Notice that the entire snippet is surrounded by curly braces. This is called a set comprehension, and it automatically eliminates duplicates in your values. So essentially, that small section of code loops through all records in your shapefile, extracts the attribute value for the FireType field, and removes duplicates. Finally, the contents of the returned set are sorted and returned.

This function accepts a table and a field as its two parameters. The table can be a stand-alone table or a feature class. Inside the function, a SearchCursor object is created using the table and field that were passed into the function. The last line of code is the key to this function.
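The pattern described above can be sketched as follows. Because ArcPy is only available inside an ArcGIS installation, this sketch takes any iterable of row tuples in place of the cursor; the function name unique_values and the sample rows are assumptions for illustration. With ArcPy, the rows would typically come from arcpy.da.SearchCursor(table, [field]) opened in a with statement.

```python
def unique_values(cursor):
    """Return the sorted, distinct values found at position zero of each row."""
    # The curly braces form a set comprehension, which drops duplicates;
    # sorted() then turns the set into an ordered list.
    return sorted({row[0] for row in cursor})


# Stand-in for a search cursor: made-up rows with a single field each.
sample_rows = [("Lightning",), ("Arson",), ("Lightning",), ("Campfire",), ("Arson",)]
fire_types = unique_values(sample_rows)
print(fire_types)
```

Each value in the resulting list could then drive one pass of a loop, for example to build a definition query and export one map per fire type.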

Deletes records in a feature class or table that have identical values in a list of fields. If the geometry field is selected, feature geometries are compared. These tokens are used when you need to specify dependencies between DDL operations. For example, the Create method returns a FeatureDatasetToken. A developer could then create several feature classes using Create. The FeatureDatasetDescription can be created from the FeatureDatasetToken.

In this way, a single call to SchemaBuilder.Build could create two tables and a relationship class between them. See the Feature Datasets section below for a code example of this scenario. The detect_objects() function can be used to generate feature layers that contain bounding boxes around the objects detected in the imagery data using the specified deep learning model.

Classes are special blocks of code that organize multiple variables and functions into an object with its own methods and functions. Classes make it easy to create code tools that can reference the same internal data lists and functions. The internal functions and variables are able to communicate across the class, so that variables defined in one part of the class are available in another. This tool reports all the records of a table or feature class that have identical values in a list of fields and generates a table that lists these identical records. If the Shape field is selected, the geometries of the features are compared.
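The logic of finding records with identical values in a list of fields can be sketched in plain Python. This is not the geoprocessing tool itself: records are modeled as dictionaries, and the function names find_identical and drop_identical, as well as the sample data, are assumptions for illustration.

```python
from collections import defaultdict


def find_identical(records, fields):
    """Group record indexes by their values in `fields`; keep groups with duplicates."""
    groups = defaultdict(list)
    for index, record in enumerate(records):
        key = tuple(record[field] for field in fields)
        groups[key].append(index)
    return [indexes for indexes in groups.values() if len(indexes) > 1]


def drop_identical(records, fields):
    """Keep only the first record from each set of identical records."""
    duplicates = {i for group in find_identical(records, fields) for i in group[1:]}
    return [record for i, record in enumerate(records) if i not in duplicates]


# Made-up records for illustration.
records = [
    {"name": "A", "type": "x"},
    {"name": "B", "type": "y"},
    {"name": "A", "type": "x"},
    {"name": "A", "type": "z"},
]
print(find_identical(records, ["name", "type"]))
print(len(drop_identical(records, ["name"])))
```

Comparing on more fields makes the match stricter: with both fields only two records are identical, while with the name field alone three are.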

The tool uses the values mapped to the Feature ID field to skip all duplicate geometries except the first geometry that is encountered, which is stored in the locator. The alternate attribute values are created based on the matching IDs of the duplicate features. Reports any records in a feature class or table that have identical values in a list of fields, and generates a table listing these identical records. If the Shape field is selected, feature geometries are compared. To do this, create a Description and pass it to the SchemaBuilder.Delete method. TableDescription and FeatureClassDescription objects can be easily constructed by passing in the definition of an existing table or feature class.

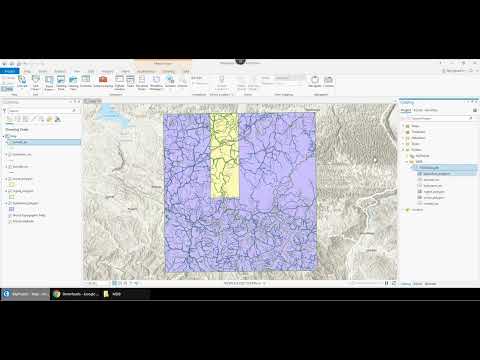

The arcgis.learn module automates all these time-consuming tasks, and the prepare_data() method can directly read the training samples exported by ArcGIS. The prepare_data() method inspects the format of the training samples exported by the export_training_data tool in ArcGIS Pro or Image Server and constructs the appropriate fast.ai DataBunch from it. This DataBunch consists of training and validation DataLoaders with the specified transformations for data augmentation, chip size, batch size, and split percentage for the train-validation split. Deep learning models 'learn' by looking at many examples of imagery and the expected outputs. In the case of object detection, this requires imagery as well as known locations of objects that the model can learn from. With the ArcGIS platform, these datasets are represented as layers and are available in our GIS.

As the Topographic basemap layer does not show a street for these features, change the basemap to Imagery to confirm the data. You will see that the features represent the entrance driveway to a building and its parking lot. Press the "Correct" note shortcut button to set the QA review value.

In the Field Setting group, click the dropdown button for the "Value Field", scroll to the bottom of the list, and select the field "review_code". This will populate the "Value" field combobox with the unique data values from the review_code field. It will then zoom to the feature containing the value "1". First, create a new Description object that matches the new definition of the table. The Name property should match the name of an existing table. Then call the Modify method on the SchemaBuilder class. The example below shows how to add two fields to an existing feature class.

The Pro SDK can be used to create tables and feature classes. To begin, create a series of FieldDescription objects. By default, the earlier layers of the model (i.e., the backbone or encoder) are frozen, and their weights are not updated when the model is being trained. This allows the model to take advantage of the pretrained weights for the backbone, and only the 'head' of the network is trained initially.

The learning rate finder can be used to identify the optimum learning rate between the different training phases. The depth of the final feature map is used to predict the class of the object within the grid cell and its bounding box. This allows SSD to be a fully convolutional network that is fast and efficient, while taking advantage of the receptive field of each grid cell to detect objects within that grid cell. If the objects you are detecting are all of roughly the same size, you can simplify the network architecture by using just one scale of the anchor boxes.

More powerful networks can detect multiple overlapping objects of varying sizes and aspect ratios, but need more data and computation for training. SSD uses a matching phase while training to match the appropriate anchor box with the bounding boxes of each ground truth object within an image. Essentially, the anchor box with the highest degree of overlap with an object is responsible for predicting that object's class and its location.
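The matching step can be sketched in a few lines, assuming that the degree of overlap is measured as intersection over union (IoU) and that boxes are given as (xmin, ymin, xmax, ymax) tuples. The function names and the 2 by 2 grid of anchors are assumptions for illustration, not the arcgis.learn implementation.

```python
def iou(box_a, box_b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    overlap_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    overlap_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = overlap_w * overlap_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def best_anchor(anchors, truth_box):
    """Index of the anchor box that overlaps the ground truth box the most."""
    return max(range(len(anchors)), key=lambda i: iou(anchors[i], truth_box))


# Four anchors tiling an 8 x 8 image, and one made-up ground truth box.
anchors = [(0, 0, 4, 4), (4, 0, 8, 4), (0, 4, 4, 8), (4, 4, 8, 8)]
truth_box = (3, 3, 8, 8)
matched = best_anchor(anchors, truth_box)
print(matched, iou(anchors[matched], truth_box))
```

Here the lower-right anchor is fully contained in the ground truth box, so it has the highest overlap and would be the one responsible for predicting that object.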

This property is used for training the network and for predicting the detected objects and their locations once the network has been trained. The arcgis.learn module includes tools that support machine learning and deep learning workflows with geospatial data. This blog post focuses on deep learning with satellite imagery.

Attribute Rules in ArcGIS Pro is a tool for setting user-defined rules that improve and automate the data editing experience when using geodatabase datasets. In this example, we will be working on adding sequential values to your geodatabase. What this process will do is add an ever-increasing number in whichever field you choose as you edit and create new features.

Using it will improve your data's integrity and save you time in the process. The second example uses the numpy module with ArcPy to produce the same results using a different method. ArcPy includes functions that let you move datasets between GIS and numpy structures.

In this case, the line below is used to convert a GIS table to a numpy array. This tool finds identical records based on input field values, then deletes all but one of the identical records from each set of identical records. The values from multiple fields in the input dataset can be compared. If more than one field is specified, records are matched by the values in the first field, then by the values of the second field, and so on. Creating a feature class follows many of the same principles as creating a table.

One addition is that you must create a ShapeDescription object to represent the definition of the shape field. As each epoch progresses, the loss for the training data and the validation set are reported. In the table above, we can see the losses going down for both the training and validation datasets, indicating that the model is learning to recognize the well pads. We continue training the model for several iterations like this until we observe the validation loss starting to go up. That indicates that the model is starting to overfit to the training data and is not generalizing well enough for the validation data.
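The rule of thumb of training until the validation loss starts to go up can be sketched as a small check over the per-epoch validation losses. The function name, the patience parameter, and the loss values below are assumptions made up for illustration; they are not taken from the training run described here.

```python
def best_epoch_before_overfit(val_losses, patience=2):
    """Return the epoch with the lowest validation loss once the loss has
    failed to improve for `patience` consecutive epochs, or None if it is
    still improving."""
    best_loss = float("inf")
    best_epoch = None
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch
    return None


# Made-up validation losses: improving until epoch 3, then rising.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
stop_at = best_epoch_before_overfit(val_losses)
print(stop_at)
```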

When that happens, we can try reducing the learning rate, adding more data, increasing regularization by raising the dropout parameter in the SingleShotDetector model, or reducing the model complexity. Once the appropriate model has been constructed, it needs to be trained over several epochs, or training passes over the training data. This process involves setting the optimum learning rate.

Picking a very small learning rate leads to slow training of the model, while picking one that is too high can prevent the model from converging and cause it to 'overshoot' the minima, where the loss is lowest. Conversion between data types is possible in Python using built-in functions that are part of the standard library. To start, the type function is useful for finding the data type of an object.
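A short sketch of checking and converting data types with Python's built-in functions follows; the variable names and values are chosen only for illustration.

```python
text_value = "42"

# type() reports the data type of an object.
print(type(text_value))

# Built-in functions convert between data types.
as_integer = int(text_value)      # string to integer
as_float = float(text_value)      # string to float
back_to_text = str(as_integer)    # integer back to string
as_tuple = tuple([1, 2, 3])       # list to tuple

print(type(as_integer), type(as_float), type(as_tuple))
```

Note that int() raises a ValueError if the string does not represent a whole number, so int("4.2") fails while float("4.2") succeeds.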
